Spatial Interactions

Project: Create // Role: Lead Designer

Designing Interactions for Spatial Computing

One of the biggest challenges when developing content for a new platform is understanding the medium you’re designing for, not just from a theoretical standpoint but from a practical, hands-on one. Spending time prototyping and iterating in Spatial Computing gave us invaluable insight into design conceits in this new space. It also gave us the opportunity to observe users and understand how they expected to interact with the experience.

To bridge the gap between users and the system, it was imperative for us to design interactions that were accessible, intuitive, and usable by as many people as possible. More importantly, when introducing users to a new platform, interactions need to feel natural, effortless, and authentic to the medium.

Project: Create became an ideal test environment to prototype, iterate, and evaluate interaction design. It presented users with a variety of tools to affect and manipulate virtual objects inside a Spatial Computing physics playground. Much of the design work we did transcended the experience and became foundational to the OS and multiple other experiences.

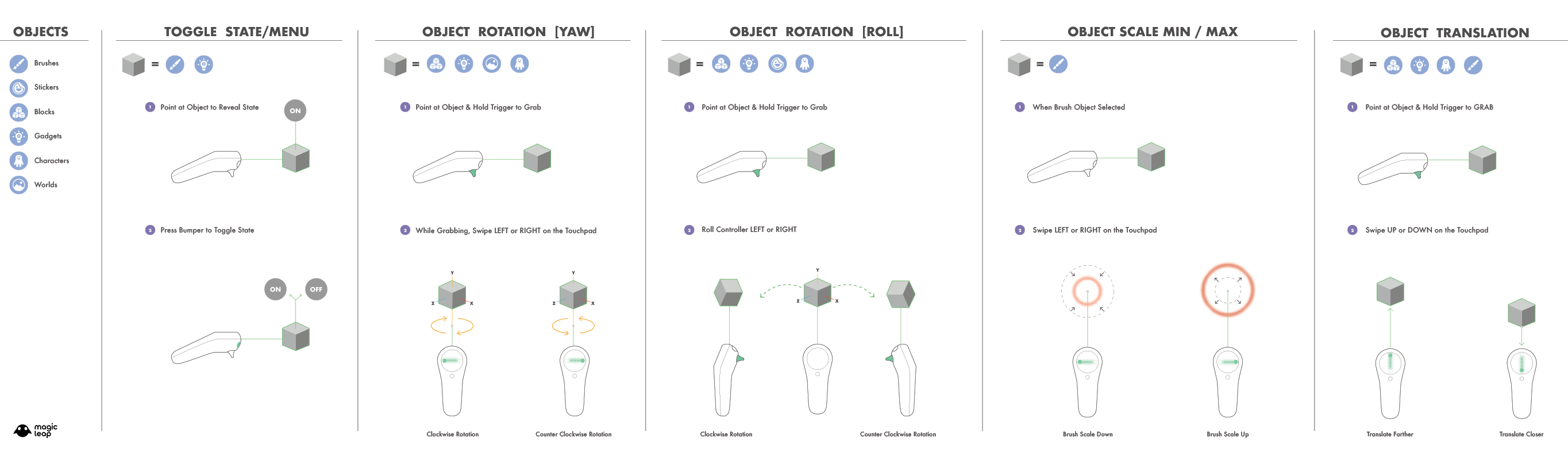

We started our discovery process by researching and evaluating inputs in existing XR platforms. Even though our peripherals were still being designed, we had to begin prototyping with the little insight we had regarding the final inputs and features supported by the ML controller:

- Three-button inputs (Trigger, Bumper, Home)

- 6DoF magnetic tracking

- Capacitive radial surface with an LED ring

Unlike most VR hardware at the time, which featured dual controllers, Magic Leap was targeting a single ambidextrous controller at launch. Knowing our constraints and affordances allowed us to draw a box to frame our design thinking as we mapped contextual input schemes to the tools and actions of the experience.

Tools and Action Mappings

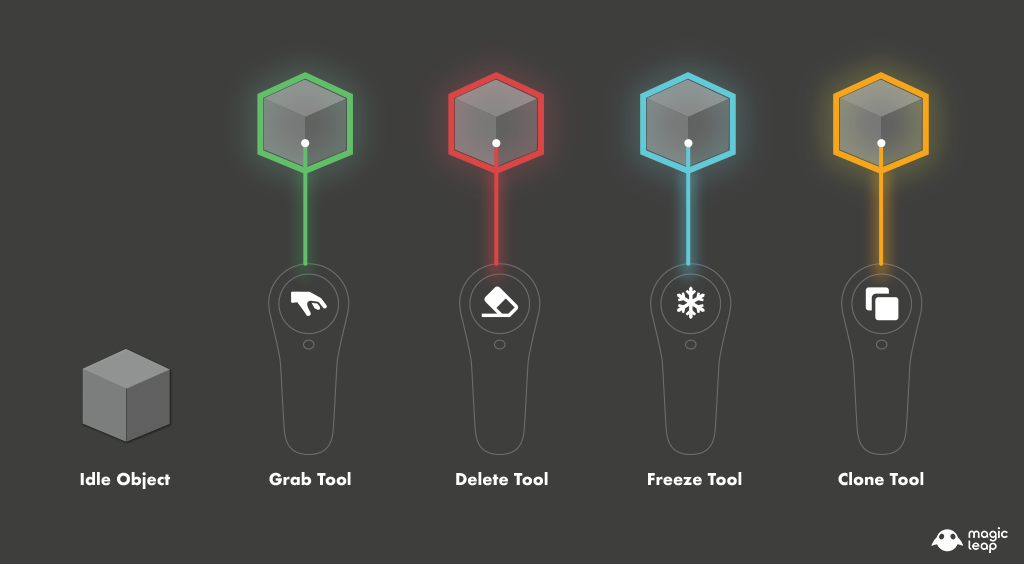

Once we had a clear target for the controller’s final inputs, form factor, and features, we started mapping those inputs to the experience’s tools and actions. Project: Create provides users with the following tools to interact with the virtual content:

- Grab – Used to select and grab content.

- Delete – Used to remove instances of a spawned object.

- Freeze – Used to disable/enable gravity on physics-enabled objects.

- Clone – Used to quickly generate copies of an instance that has been spawned.

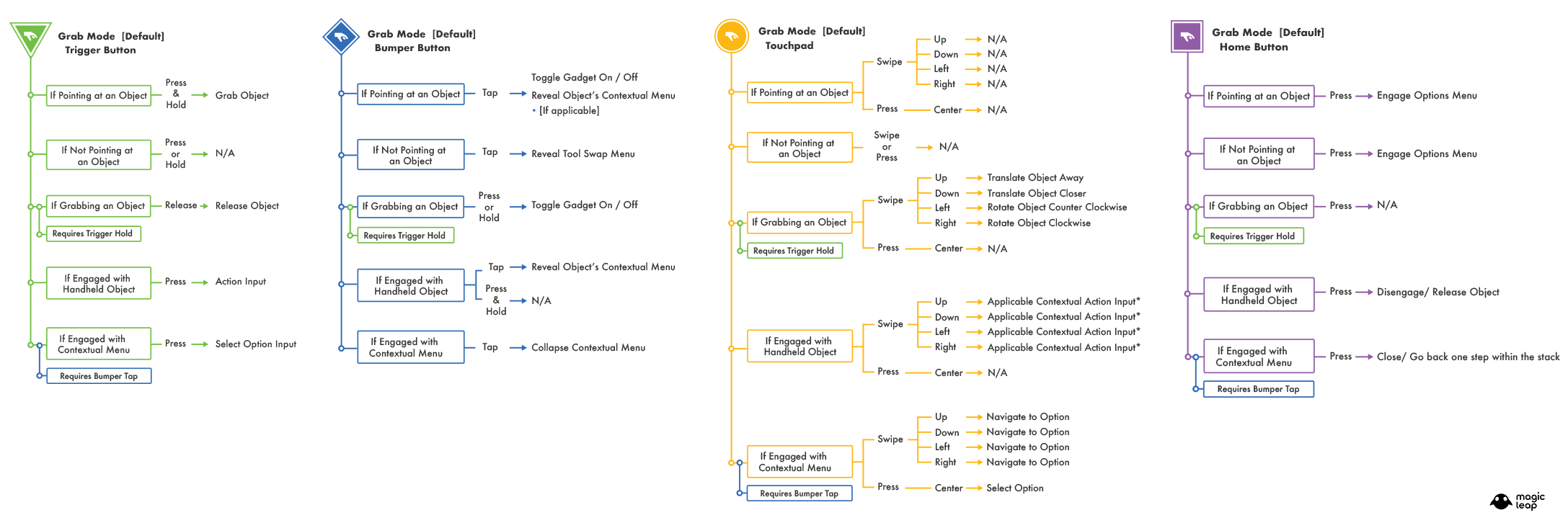

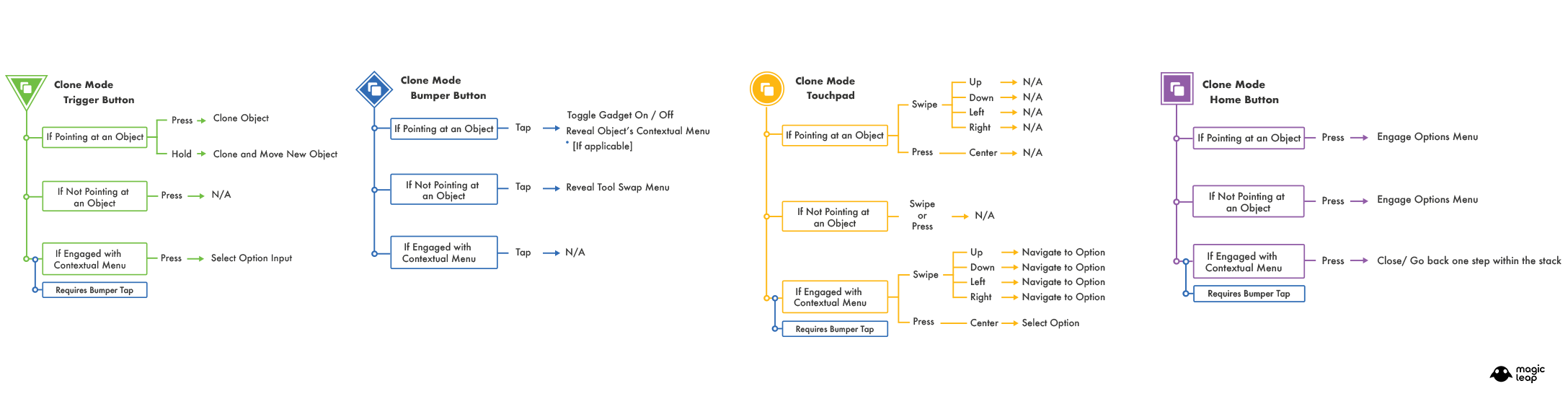

The following images outline the final input schemes for each tool, which is indicated by the icon at the top left of each section. The color coding corresponds to each individual input: Trigger, Bumper, Touchpad, and Home. The logic is laid out visually as “if statements”: the input state, followed by the resulting interaction.

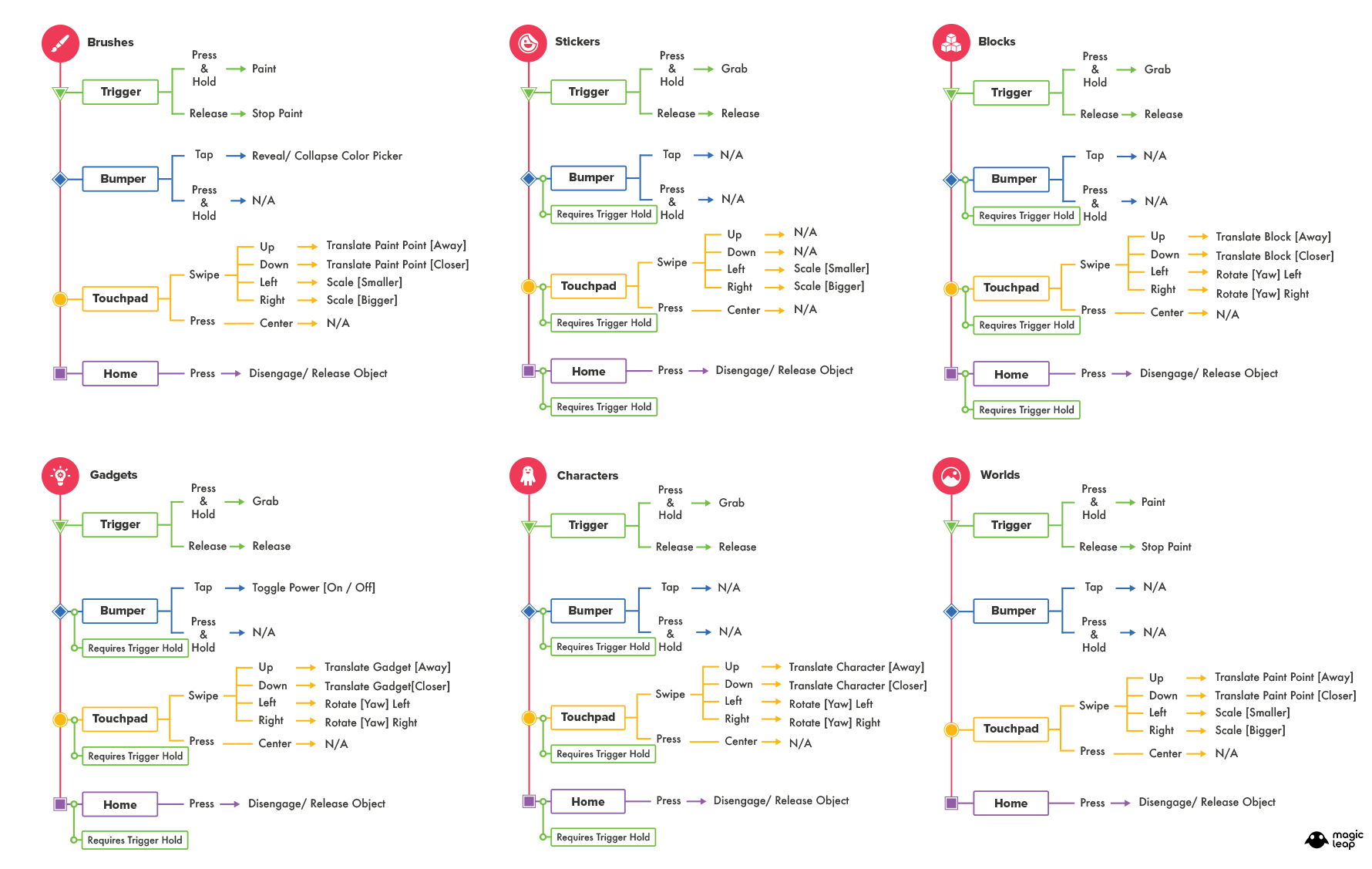

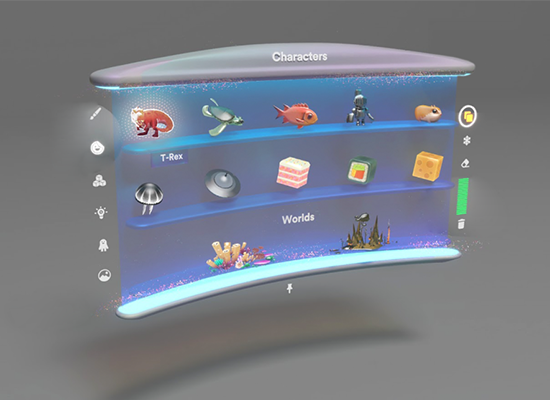
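The “if statement” logic described above can be sketched as a lookup keyed on the active tool, the input, and the input state. This is a minimal illustration only: the tool and input names come from the text, but the state names and every binding in `INPUT_SCHEME` are hypothetical placeholders, not the mappings shown in the images.

```python
from enum import Enum


class Tool(Enum):
    GRAB = "grab"
    DELETE = "delete"
    FREEZE = "freeze"
    CLONE = "clone"


class Input(Enum):
    TRIGGER = "trigger"
    BUMPER = "bumper"
    TOUCHPAD = "touchpad"
    HOME = "home"


# Hypothetical bindings for illustration; the real schemes are in the images.
# Each key reads as: "if <tool> is active and <input> is in <state>, then ..."
INPUT_SCHEME = {
    (Tool.GRAB, Input.TRIGGER, "held"): "hold the targeted object",
    (Tool.GRAB, Input.TRIGGER, "released"): "drop the held object",
    (Tool.DELETE, Input.TRIGGER, "pressed"): "remove the targeted object",
    (Tool.FREEZE, Input.TRIGGER, "pressed"): "toggle gravity on the targeted object",
    (Tool.CLONE, Input.TRIGGER, "pressed"): "spawn a copy of the targeted object",
}


def resolve(tool: Tool, input_: Input, state: str) -> str:
    """Return the interaction for a tool/input/state triple, or a no-op."""
    return INPUT_SCHEME.get((tool, input_, state), "no action")
```

Keeping the scheme as data rather than nested conditionals makes it easy to check that the same input behaves consistently across tools, which is the consistency goal the section describes.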

Achieving input consistency for the tools system represented a significant milestone, but we still had to define a more granular level of interactions that were unique to the instantiated objects from the content menu, which were divided into six categories based on function and properties:

- Brushes – Controller-centric objects that enable self-expression.

- Stickers – Surface-dependent objects that enable decoration.

- Blocks – Physics objects that enable modular building via proximity snapping.

- Gadgets – Physics objects with binary states that enable building and tinkering.

- Characters – Autonomous objects with pathing and navigation that enable emergent interactions.

- Worlds – Surface-dependent objects that enable environmental context.

The following image outlines the input schemes and interactions corresponding to each object category:

Visual Feedback

When dealing with a limited FOV (Field of View) to render the virtual content, it’s critical to consider what the user is able to see through the headset at all times during the experience.

The ray-cast laser system provided a clear visual representation of the targeting distance between the controller and any mapped surfaces or digital objects. We also had to find a way to provide visual clarity for input modalities, which changed depending on the currently selected tool.

To solve this, we designed a color coding system that dynamically updated the ray-cast color whenever the user changed the selected tool: Grab, Delete, Freeze, or Clone. Additionally, a highlight effect was displayed around any virtual object that the ray-cast hit. When the user pressed a button, haptic feedback, audio, and VFX were triggered to reinforce and confirm the input.
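A minimal sketch of that feedback loop, assuming a simple state object for the ray-cast. The `RayCast` class, its method names, and the RGB values in `TOOL_COLORS` are all hypothetical; the text does not say which color belonged to which tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Color = Tuple[int, int, int]

# Hypothetical palette: one ray color per tool (actual colors are not stated).
TOOL_COLORS = {
    "grab": (0, 160, 255),
    "delete": (255, 60, 60),
    "freeze": (120, 220, 255),
    "clone": (90, 220, 120),
}


@dataclass
class RayCast:
    tool: str = "grab"
    highlighted: Optional[str] = None  # object currently under the ray, if any
    feedback_log: List[str] = field(default_factory=list)

    @property
    def color(self) -> Color:
        # The ray color is always derived from the selected tool.
        return TOOL_COLORS[self.tool]

    def select_tool(self, tool: str) -> None:
        if tool not in TOOL_COLORS:
            raise ValueError(f"unknown tool: {tool}")
        self.tool = tool

    def update_hit(self, hit_object: Optional[str]) -> None:
        # Highlight whatever the ray hits; clear the highlight on a miss.
        self.highlighted = hit_object

    def press(self, button: str) -> None:
        # Every button press fires all three confirmation channels.
        self.feedback_log.extend(
            f"{channel}:{button}" for channel in ("haptic", "audio", "vfx")
        )
```

Deriving the color from the tool, instead of storing it separately, means the ray can never show a color that disagrees with the active tool.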

Gizmos

One last thing we had to figure out was how to show transforms (translation, rotation, scale) on intangible virtual objects, which also implied physical properties analogous to real-world objects. We started by researching several 3D modeling packages to gain insight into how they approached this problem.

The following solutions were designed to merge transform gizmos and game physics applied to virtual objects, which were displayed contextually:

- Positional Locator – A line displayed from the center of an actively held object, which would extend onto the nearest horizontal surface. The positional locator compensated for the lack of shadows and provided a sense of location relative to the user’s physical space.

- Rotational Indicator – A set of arrows displayed whenever an object was being actively rotated on the Y axis (yaw). This helped convey a sense of rotational direction for multiple objects.

- Scale Min/Max Indicator – A bounding box displayed around scalable objects, which used a color gradient (green to red) to show when the user had reached the minimum and/or maximum threshold for the scale parameter.

- Raycast Tension – To imply an object’s mass and velocity when being actively held by the user, we added a tension parameter to the raycast laser. This helped make the virtual objects feel more physical and playful as users shook the controller while holding them.

- Angular Mass – All physics objects were assigned a mass value, which allowed the system to simulate the illusion of inertia whenever a user would grab said object and translate it in space at a set velocity.
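Two of the gizmos above lend themselves to a short sketch. The first function blends a bounding-box color from green to red as the scale nears either limit; the second steps a held object toward the ray’s end point with a damped spring, so heavier objects lag more. Both are assumptions for illustration: the source does not say how the gradient was computed or that the tension parameter was a spring, and `warn_band`, `stiffness`, and `damping` are hypothetical names.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def scale_limit_color(scale: float, min_scale: float, max_scale: float,
                      warn_band: float = 0.2) -> Vec3:
    """RGB in 0..1: green mid-range, blending to red near either scale limit."""
    span = max_scale - min_scale
    clamped = min(max(scale, min_scale), max_scale)
    # Distance to the nearest limit as a fraction of the range (0 at a limit).
    nearest = min(clamped - min_scale, max_scale - clamped) / span
    # t is 0 outside the warning band and rises to 1 at the limit itself.
    t = max(0.0, 1.0 - nearest / warn_band)
    return (t, 1.0 - t, 0.0)


def step_tethered_object(position: Vec3, velocity: Vec3, target: Vec3,
                         mass: float, stiffness: float, damping: float,
                         dt: float) -> Tuple[Vec3, Vec3]:
    """Advance a held object one time step with a damped spring (assumed model)."""
    # Spring force pulls toward the ray end point; damping opposes velocity.
    new_velocity = tuple(
        v + ((stiffness * (t - p) - damping * v) / mass) * dt
        for p, v, t in zip(position, velocity, target)
    )
    # Semi-implicit Euler: integrate position with the updated velocity.
    new_position = tuple(p + nv * dt for p, nv in zip(position, new_velocity))
    return new_position, new_velocity
```

With the same spring settings, a larger `mass` yields a smaller acceleration per step, which is one way to produce the sense of inertia described for grabbed objects.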

Learnings

The following are some of the interaction design learnings we accrued during the development of Magic Leap’s first immersive interactive experience, Project: Create.

1. The User Is The Camera – Traditional video games and digital media are usually presented through screens, which provide real-time feedback based on user-driven inputs. To render the content, a virtual camera is often parented to a user-controlled character and follows it as it moves around and interacts with the virtual world. This method has afforded developers a number of tricks to guide or misdirect the user’s attention, as well as to frame character performance for cinematic sequences.

In Spatial Computing, camera control is out of the equation: developers cannot program a user or instruct them to execute their commands, at least not yet. A key consideration when designing for Spatial Computing is therefore to always think about the user holistically. This requires thinking past preconceived paradigms from 2D screens and interfaces and adopting design heuristics from other fields such as architecture, theatrical staging, and industrial design. Keeping an open mind makes the discovery process more enjoyable. This is still a relatively new space to design for, we are all still learning, and that is OK!

2. Minimize Workload – When designing interactions for Spatial Computing, consider the user’s physical and cognitive load. This is a basic usability heuristic, but it’s imperative to remember that every design choice comes with a cost to the user, which can negatively impact their experience.

Design flexible input systems that account for comfort and usability, and consider the physical exertion required to execute every interaction. Remember that some users may prefer to stand while others will prefer to sit; both deserve and expect the same experience.

Introduce new concepts progressively and contextualize all relevant information to avoid choice paralysis and cognitive overload. Allow users to advance at their own pace and always include error prevention systems: the less time a user spends worrying about making a mistake, the more they can immerse themselves in the experience.

Copyright 2023 - Javier Busto