Hand-Based Interaction Design

Project: The Last Light // Role: Lead Designer

The Last Light featured end-to-end hand inputs, which gave the team the opportunity to design embodied interactions for the first time in an immersive application.

Designing for hand input presented several user experience challenges. We had to work against strong preconceptions when presenting users with skeuomorphic objects, which they already knew how to interact with in the physical world. The lack of haptic or tactile feedback when manipulating a digital analog was also a major problem. On top of that, hardware limitations such as the camera FOV (field of view) and the render clip plane constrained the area where these interactions could happen.

We knew that all of these problems would dissuade creators from committing their time to developing input systems and solutions. That was one of the main reasons I pushed for an experience that could show what was possible on the Magic Leap One and how to do it in a user-friendly way.

The input system focused on ease of interaction, which was rather complex to achieve. To accomplish this, we evaluated designs against a set of basic usability heuristics:

- Signs and Feedback

- Clarity

- Form Follows Function

- Consistency

- Minimum Workload

- Error Prevention and Recovery

- Flexibility

First Contact

We observed that very few people came into the experience knowing how to use hand inputs in spatial computing, so we had to present the first hand-based interaction in a way that did not leave them feeling stressed or distracted. This “first contact” with a tangible virtual object happened on the Start “screen”: a button that users had to push with their hand to activate.

The interaction model remained consistent throughout the entire experience. It took several iterations to identify the right size and form for menu buttons so that they would implicitly communicate their affordances to users. We ended up building buttons roughly the size of a fist. This made it easier for users to use their whole hand as they reached out to touch them, and it also gave the system more tracking points (fingers) with which to interpret the input.

Visual and audio feedback were critical for communicating button states to the user. Just as one expects real-time feedback when a 2D cursor hovers over interactive elements on a screen, we knew we needed to show users that their hands were not only being recognized but could also interact with the virtual content.

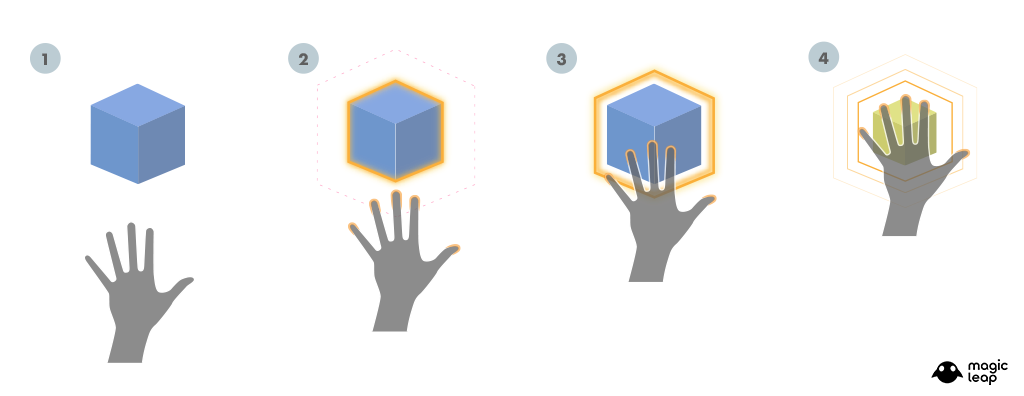

The accompanying image breaks down the button push interaction, with each button state numbered accordingly:

- Idle

- Approach

- Contact

- Depressed
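As a rough illustration only, the four states can be thought of as a function of how far the hand is from the button surface. The Python sketch below is not the shipped implementation; `button_state`, `APPROACH_RADIUS`, and `PRESS_DEPTH` are hypothetical names, and the threshold values are placeholders rather than the ones we tuned.

```python
from enum import Enum


class ButtonState(Enum):
    IDLE = 1
    APPROACH = 2
    CONTACT = 3
    DEPRESSED = 4


# Hypothetical thresholds in metres; the real values are not given here.
APPROACH_RADIUS = 0.30  # hand is close enough to hint that the button is interactive
PRESS_DEPTH = 0.02      # travel past the surface that counts as a full press


def button_state(hand_distance: float) -> ButtonState:
    """Map a signed hand-to-surface distance to a button state.

    Positive distance: the hand is in front of the button surface.
    Zero or negative: the hand has reached or pushed past the surface.
    """
    if hand_distance > APPROACH_RADIUS:
        return ButtonState.IDLE
    if hand_distance > 0.0:
        return ButtonState.APPROACH
    if hand_distance > -PRESS_DEPTH:
        return ButtonState.CONTACT
    return ButtonState.DEPRESSED
```

Each state would then drive its own visual and audio cue, so the user always knows whether the hand is recognized and how far along the press is.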

We had to be very mindful of the complexity and ambiguity that gestures could introduce into such a naturalistic interaction method, one that humans have been using instinctively for thousands of years. Constraining the number of gestures needed to interact with both virtual buttons and objects allowed us to present a scheme that was easy to learn, recall, and execute.

Secondary Hand Inputs

Providing secondary inputs without a control peripheral became an obvious challenge during the interaction design process. We knew that the system was capable of recognizing explicit hand gestures, but these weren’t intuitive to users, nor were there any standards that defined how to map peripheral inputs onto a hand.

Two main gestures (open hand and fist) were used to interact with menus and objects. We then identified that we could use an open palm gesture as a variant to instigate the options menu. This meant that the entire experience could be used with a single hand and only two unique hand poses, instead of relying on gimmicky gestural controls.

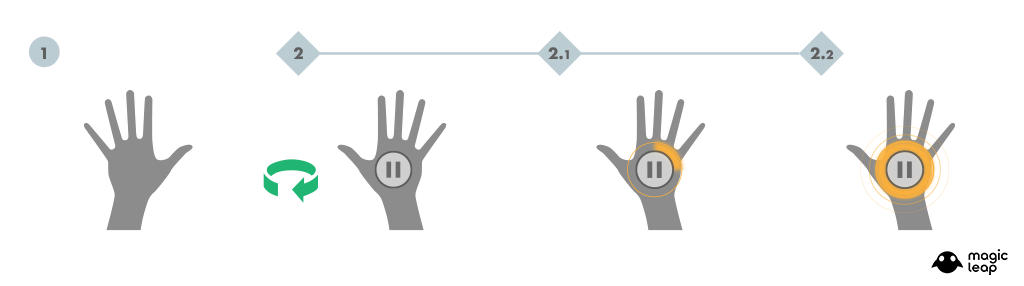

All interactions were designed so that users had to move their hands away from their bodies in order to reach virtual buttons or objects placed in front of them. This allowed us to use the simple motion of turning the hand as the method for displaying the Pause Menu.

Several constraints were built into the system to prevent accidental instigation. Among them was a timer that ensured the user was performing the gesture on purpose. Upon detecting the palm gesture, the system would immediately display a pause icon attached to the user’s palm, with a radial throbber signaling the time required to display the menu. If users changed their mind, they could simply flip their hand to cancel the interaction.
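The timer constraint can be sketched as a small dwell counter. This is a minimal Python sketch under stated assumptions, not the production code: `PauseGesture`, `update`, and the one-second `DWELL_SECONDS` are hypothetical, and the palm detection itself is assumed to come from the hand-tracking system as a boolean.

```python
from dataclasses import dataclass

DWELL_SECONDS = 1.0  # hypothetical hold time; the actual duration is not stated


@dataclass
class PauseGesture:
    held: float = 0.0        # seconds the palm gesture has been held so far
    menu_open: bool = False  # True once the dwell time has elapsed

    def update(self, palm_detected: bool, dt: float) -> float:
        """Advance by dt seconds and return throbber progress from 0.0 to 1.0."""
        if self.menu_open:
            return 1.0
        if not palm_detected:
            # Flipping the hand away cancels the gesture and resets the timer.
            self.held = 0.0
            return 0.0
        self.held += dt
        if self.held >= DWELL_SECONDS:
            self.menu_open = True
        return min(self.held / DWELL_SECONDS, 1.0)
```

The returned progress value is what a radial throbber would render each frame, and resetting to zero when the gesture is lost is what makes cancelling feel immediate.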

The next challenge was figuring out how to operate and decouple a menu that was generated from a user’s hand. We tested different approaches, some of which required users to keep their palm in view while interacting with the floating buttons using their other hand. We also tried placing buttons directly on the user’s fingertips to provide tactile feedback, but ultimately decided to remove the dependency on keeping the instigating hand in view. This reduced physical exertion and afforded a consistent interaction model for displaying, navigating, and operating the pause menu.

Skeuomorphic Virtual Object Manipulation

The Last Light features several interactive events that are tied into the main story. These are presented as skeuomorphic, life-size objects that the user can manipulate in real time.

Interacting with virtual objects using hands as the input introduced cognitive dissonance, which often elicited negative feelings because users could not manipulate the objects as they would in everyday life. So much sensory feedback happens in real life that we take it for granted: once we’ve learned how to use something, our brains can quickly retrieve that information and execute the process on autopilot.

Skeuomorphic virtual objects imply affordances identical to those of their physical analogs. However, they lack most of the physical properties that would normally inform users about how to interact with them naturally. For example, the lack of physical contact prevents sensory perception of an object’s texture, the lack of mass means that users can’t perceive the object’s weight as they move it around, and no gravitational pull acts on the virtual object, among many other factors.

In order to provide a usable interaction method we had to account for all the missing feedback and provide alternatives to visually and sonically communicate the state of an object clearly and consistently.

We observed that the way users expected to interact with these objects was very subjective. For example, not everyone grabbed a virtual key the same way, and the articulation of the wrist and fingers was heavily influenced by the distance and angle at which the object was displayed relative to the user and their hand.

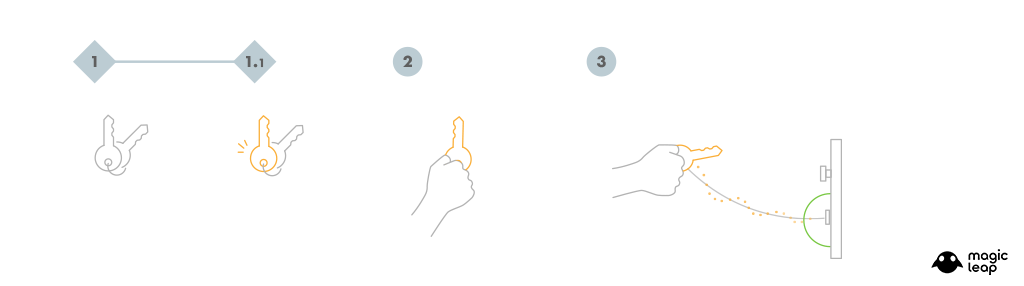

Knowing that we couldn’t control how a user would execute an interaction led us to develop a flexible system that accounted for error prevention and recovery. At its core, we knew that all interactions could be broken down into three basic steps:

- Affordance Signaling

- Object Activation

- Translation Towards Target
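One way to picture these three steps is as a small per-frame state function. The Python sketch below is illustrative only and makes several assumptions that are not taken from the project: the `Phase` names, the `step` function, the radii, the use of a closed fist as the activation signal, and the rule that releasing early returns the object to the signaling phase.

```python
import math
from enum import Enum


class Phase(Enum):
    IDLE = "idle"
    SIGNALING = "affordance signaling"
    ACTIVATED = "object activation"
    DONE = "reached target"


# Hypothetical radii in metres; placeholders, not tuned values.
SIGNAL_RADIUS = 0.25  # hand is near enough for the object to signal its affordance
GRAB_RADIUS = 0.08    # hand is near enough for a fist to activate the object
SNAP_RADIUS = 0.05    # hand is near enough to the target to complete the move


def step(phase, hand, obj, target, fist_closed):
    """Return (next phase, next object position) for one tracking frame."""
    if phase is Phase.DONE:
        return phase, target
    if phase is Phase.ACTIVATED:
        if not fist_closed:
            # Assumed recovery rule: releasing early leaves the object in place.
            return Phase.SIGNALING, obj
        if math.dist(hand, target) <= SNAP_RADIUS:
            return Phase.DONE, target
        # Translation towards target: the object follows the hand.
        return Phase.ACTIVATED, hand
    distance = math.dist(hand, obj)
    if fist_closed and distance <= GRAB_RADIUS:
        return Phase.ACTIVATED, obj
    if distance <= SIGNAL_RADIUS:
        return Phase.SIGNALING, obj
    return Phase.IDLE, obj
```

Because activation depends only on proximity and a coarse hand pose, the sketch does not care how the wrist or fingers are articulated, which mirrors the flexibility goal described above.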

The videos below are two examples of the final implementation of hand-based interactions with skeuomorphic objects.

Copyright 2023 - Javier Busto